What is a spider?

The term "search engine spider" is used interchangeably with "search engine crawler." A spider is a program that a search engine uses to find information on the World Wide Web and to index what it finds, so that relevant results appear when someone searches for a keyword. The spider "reads" the text of a web page or a collection of pages and records any hyperlinks it finds. It then follows those URLs, crawls the linked pages in turn, and saves copies of them so they can be added to the search engine's index and returned to searchers. Spiders are always working, sometimes to index new pages and sometimes to revisit pages that change frequently. Their goal is to keep the search engine they belong to supplied with the most up-to-date content possible.
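The crawl loop described above (read a page, record its links, follow them) can be sketched in Python as a breadth-first traversal. This is a minimal illustration, not how any particular search engine works: the function names, the `max_pages` limit and the example.com URLs are hypothetical, and a real spider would also obey robots.txt, rate-limit its requests and schedule revisits of pages that change. The `fetch` function is passed in so the demo can run on an in-memory site without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin
from urllib.request import urlopen


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag on a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def fetch_url(url):
    """Download a page and decode it as UTF-8 (simplified: ignores charset headers)."""
    with urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def crawl(seed, fetch=fetch_url, max_pages=50):
    """Breadth-first crawl from `seed` (hypothetical helper).

    Returns a dict mapping each visited URL to its raw HTML, standing in
    for the copies a spider saves for the search engine's indexer.
    """
    frontier = deque([seed])  # URLs waiting to be visited
    seen = {seed}             # URLs already queued, so each is fetched once
    pages = {}
    while frontier and len(pages) < max_pages:
        url = frontier.popleft()
        try:
            html = fetch(url)
        except (OSError, ValueError):
            continue          # skip pages that fail to download
        pages[url] = html
        parser = LinkExtractor()
        parser.feed(html)
        for href in parser.links:
            # Resolve relative links against the current page and drop #fragments.
            absolute, _ = urldefrag(urljoin(url, href))
            if absolute.startswith(("http://", "https://")) and absolute not in seen:
                seen.add(absolute)
                frontier.append(absolute)
    return pages


# Demo on a tiny in-memory "website" so no network access is needed.
demo_site = {
    "https://example.com/": '<a href="/about">About</a> <a href="/blog#top">Blog</a>',
    "https://example.com/about": '<a href="/">Home</a>',
    "https://example.com/blog": '<a href="https://example.com/about">About</a>',
}
print(sorted(crawl("https://example.com/", fetch=demo_site.__getitem__)))
# prints ['https://example.com/', 'https://example.com/about', 'https://example.com/blog']
```

The `seen` set is what keeps the spider from fetching the same page twice when pages link back to each other, as the home and about pages do in the demo.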

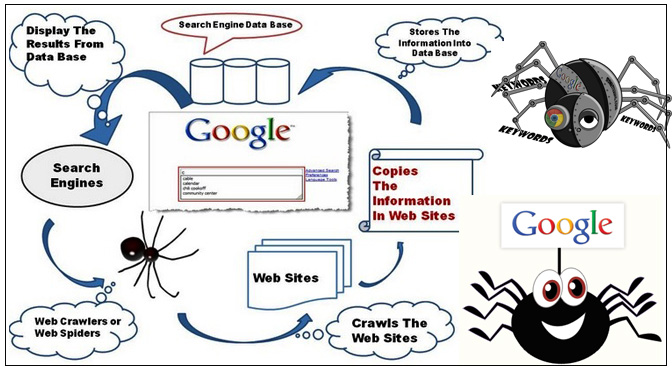

HOW SPIDERS WORK

Web crawlers, web spiders and search engine robots (different names for the same kind of program) play a vital role in effective search engine optimization, because a page has to be found by them before it can appear in search results. Search engines are the main tool people use to find information in the vast ocean of the World Wide Web, and each user relies on whichever search engine they are comfortable with. But how do these search engines get their information, and where do they find so much of it on almost any subject under the sun? The answer is that each search engine builds and maintains its own database of information.

How do search engines find information?

Search engines send spiders out across the World Wide Web to find information for us. These spiders crawl websites, and the pages they collect are indexed in the search engine's database or directory so that people searching the web can find them.
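One common way to organize such a database for keyword lookup is an inverted index, which maps each word to the pages that contain it. The Python sketch below is a minimal illustration, not how any particular search engine is built: it assumes page text has already been extracted from the HTML, and the helper names (`tokenize`, `build_index`, `search`) and example URLs are hypothetical. It does no ranking or stemming, so "spider" and "spiders" count as different words.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lower-case the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


def build_index(pages):
    """Build an inverted index: word -> set of URLs whose text contains it.

    `pages` maps URL -> plain page text (assumed already stripped of HTML).
    """
    index = defaultdict(set)
    for url, text in pages.items():
        for word in tokenize(text):
            index[word].add(url)
    return index


def search(index, query):
    """Return the URLs containing every word of the query, sorted for stable output."""
    words = tokenize(query)
    if not words:
        return []
    # Intersect the page sets of all query words (an AND query).
    results = set.intersection(*(index.get(w, set()) for w in words))
    return sorted(results)


demo_pages = {
    "https://example.com/": "Welcome to our spider guide",
    "https://example.com/about": "About search engine spiders and crawlers",
    "https://example.com/blog": "How a search engine crawler indexes pages",
}
demo_index = build_index(demo_pages)
print(search(demo_index, "search engine"))
# prints ['https://example.com/about', 'https://example.com/blog']
```

Looking up a query only touches the page sets for its words, which is why an index built ahead of time by the spider lets results appear quickly instead of rescanning every saved page.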

NAMES OF SPIDERS

- Yahoo: Yahoo Slurp
- Google: Googlebot
- Bing: Bingbot
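Site owners often address these spiders by name in a site's robots.txt file. As a hedged illustration, the Python sketch below uses the standard library's `urllib.robotparser` to check which spider may fetch which URL under a set of hypothetical rules; the paths and example.com URLs are made up, while `Googlebot`, `bingbot` and `Slurp` are the user-agent names these spiders match in robots.txt.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules for an example site.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/

User-agent: bingbot
Disallow: /

User-agent: *
Disallow: /tmp/
"""


def build_parser(robots_txt):
    """Parse robots.txt text directly, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = build_parser(ROBOTS_TXT)
for agent in ("Googlebot", "bingbot", "Slurp"):
    for url in ("https://example.com/blog",
                "https://example.com/private/x",
                "https://example.com/tmp/y"):
        # can_fetch applies the first group whose User-agent matches, else the * group.
        print(agent, url, rules.can_fetch(agent, url))
```

Under these rules Googlebot may still fetch `/tmp/` pages, because a spider that matches its own `User-agent` group follows only that group and ignores the `*` group. This is how Python's parser behaves; each search engine documents its own matching rules.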
